
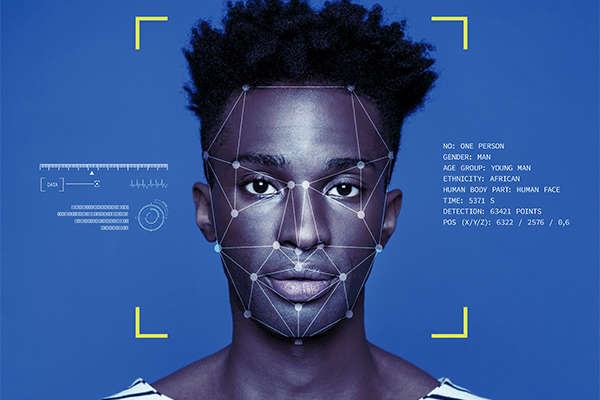

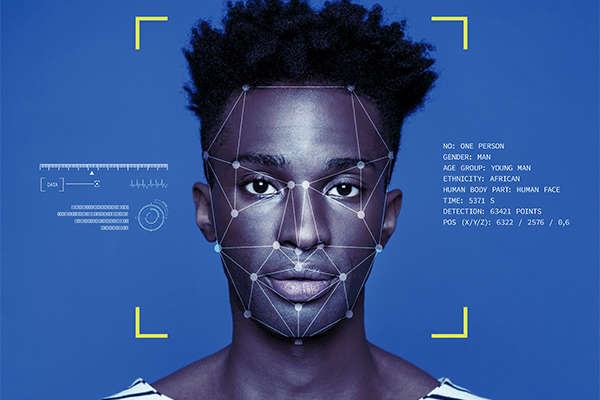

Beyond the intrusiveness of automated facial recognition is concern about ‘false positives’ and potential for racial bias in the technology

By Sailesh Mehta and Shahid Khan

The Automated Facial Recognition genie has long been out of the bottle. The question is whether it can be controlled. Police forces and secret services have been using the technology for some time. Its backers hail it as an unbiased tool for catching criminals, finding missing persons and protecting the public, particularly against terrorists. Civil liberties groups warn of the chilling effect such technology has already had on the right to privacy and on freedom of movement and speech – and of its potential for abuse. It can be used to track every movement of an individual in real time, and to keep tabs on their network of connections. The integrated use of the technology, connecting it with cars driven, phone calls made and items purchased, is reminiscent of the mass surveillance in the totalitarian state depicted in Orwell’s 1984.

An aspect of automated facial recognition (AFR) was tested in the Court of Appeal recently in R (on the application of Edward Bridges) v Chief Constable of South Wales Police [2020] EWCA Civ 1058. The case concerned the use of facial recognition technology by South Wales Police in a pilot project. Cameras would capture, from afar, images of individuals attending concerts or football matches; biometric data would be extracted from the images and compared algorithmically with the facial data held on a watchlist database. The system can scan fifty faces per second. If there is no match, the individual’s facial biometric data is immediately destroyed. If there is a positive match, the system requires a police officer and another person to decide whether to stop the individual, which may lead to an arrest. Edward Bridges challenged the lawfulness of the use of the technology. Beyond the intrusiveness of such surveillance, there is concern about ‘false positives’ – incorrect identifications by the algorithms used in the automated process.
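For readers who want to see the shape of such a comparison, the matching step described above can be sketched in a few lines of Python. This is an illustration only, not the software used by South Wales Police: the function names, the use of cosine similarity over numeric face templates and the 0.9 threshold are all assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two numeric face templates (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty template cannot match anything
    return dot / (norm_a * norm_b)


def screen_face(template, watchlist, threshold=0.9):
    """Compare one captured template against every watchlist entry.

    Returns None when nothing reaches the (hypothetical) threshold, in which
    case the caller is expected to discard the template. Otherwise returns
    the best candidate, flagged for a human decision rather than acted on
    automatically.
    """
    best_name, best_score = None, threshold
    for name, reference in watchlist.items():
        score = cosine_similarity(template, reference)
        if score >= best_score:
            best_name, best_score = name, score
    if best_name is None:
        return None
    return {"candidate": best_name, "score": best_score, "needs_human_review": True}


# Hypothetical two-entry watchlist with toy two-dimensional templates.
watchlist = {"person_a": [1.0, 0.0], "person_b": [0.0, 1.0]}
print(screen_face([1.0, 0.0], watchlist))  # a candidate for human review
print(screen_face([1.0, 1.0], watchlist))  # below threshold, so no match
```

Where the threshold is set matters: lowering it catches more genuine matches but also produces more false positives, which is the trade-off at the centre of the concerns discussed here.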

The case was heard in the context of growing international evidence of the potential for misuse of the technology and its potential for bias. In late 2019, the National Institute of Standards and Technology (NIST), an American organisation, carried out an evaluation of 189 software algorithms from 99 developers – a majority of the industry. The tests showed a worrying range of inaccuracy – with higher rates of false positives for Asian and African-American faces relative to images of Caucasians. The differentials often ranged from a factor of 10 to a factor of 100. Differentials were also worryingly high for African-American women’s faces. The authors of the study understood that false positives, when one face is being compared to a large database, could lead to a person wrongly being put on a watch list or even arrested. They also understood that the higher rates of error for Black and Asian faces were a function of the ‘training’ algorithm used by the commercial programmes, which educates the technology about facial recognition. But it was difficult, if not impossible, to delve into the ‘training’ algorithms because such matters were closely guarded commercial secrets. The authors of the study stated that they had ‘found empirical evidence for the existence of demographic differentials in the majority of the face recognition algorithms studied’. They had found an inherent racial bias in the algorithms. The study found that the best way to reduce this bias was to use more diverse data to train the algorithms.
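To make the idea of a ‘differential’ concrete, the short Python sketch below computes a false positive rate for two groups and the ratio between them. The group labels and counts are invented purely for illustration and are not figures from the NIST study; the function names are likewise hypothetical.

```python
def false_positive_rate(false_positives, impostor_comparisons):
    """Share of comparisons between different people wrongly reported as a match."""
    return false_positives / impostor_comparisons


def differential(group_rate, baseline_rate):
    """How many times higher one group's false positive rate is than the baseline's."""
    return group_rate / baseline_rate


# Invented counts: (false positives, comparisons between different people).
counts = {
    "baseline_group": (10, 100_000),
    "comparison_group": (100, 100_000),
}

rates = {group: false_positive_rate(fp, n) for group, (fp, n) in counts.items()}
ratio = differential(rates["comparison_group"], rates["baseline_group"])

for group, rate in rates.items():
    print(f"{group}: {rate:.4%} of comparisons were false positives")
print(f"differential: roughly a factor of {ratio:.0f}")
```

With these made-up numbers the comparison group is wrongly matched about ten times as often as the baseline group, the lower end of the range the study reported. Even a small-looking rate becomes significant when a system is scanning many faces per second against a large watchlist.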

In Bridges, the Court of Appeal found that there was a breach of Article 8 of the European Convention on Human Rights – the right to respect for private and family life. Any interference with this right must be in accordance with the law. The court held that the interference with the Article 8 right was not in accordance with the law because (i) it was not clear who could be placed on the watchlist – the discretion was too wide – and (ii) there did not appear to be any criteria for determining where the cameras could be deployed.

The court also found a breach of the Public Sector Equality Duty (PSED) as set out in s 149(1) of the Equality Act 2010. The police had never sought to satisfy themselves that the software program did not have an unacceptable bias on grounds of race or sex. Expert evidence before the court suggested that algorithms could have such a bias. As a result, the police had not done all that they reasonably could to fulfil the duty to ensure equality.

Thus, the court has temporarily stopped the use of this technology. Its use will resume as soon as the police set up a series of checks and balances that satisfy the PSED, and the discretion as to where to place the cameras and who should be on the watchlist is better regulated. The genie is only taking a momentary rest.

